In the dynamic world of software development, DevOps has emerged as a game-changer, streamlining the lifecycle of applications from conception to deployment. The integration of DevOps practices with Artificial Intelligence (AI) projects propels this evolution further, offering unprecedented speed, efficiency, and scalability. Here, we explore 10 DevOps technologies that are particularly relevant for AI, each playing a pivotal role in transforming how we build, deploy, and manage AI-driven applications.

Kubernetes

Kubernetes, the leading container orchestration platform, offers a robust solution for deploying, scaling, and managing containerized AI applications. It handles the complexity of running containers at scale, ensuring that AI applications are highly available and accessible. Kubernetes automates various operational tasks such as load balancing, self-healing (automatic restarts), and rollbacks, which are essential for maintaining the performance and reliability of AI systems in production environments.
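
To illustrate how replicas and health checks drive this self-healing behavior, here is a minimal sketch that builds a Kubernetes Deployment manifest as a Python dictionary and prints it as JSON. `kubectl apply -f` accepts JSON as well as YAML. The application name, the image `registry.example.com/sentiment-model:1.0`, port 8080, and the `/healthz` endpoint are hypothetical placeholders for a model-serving container.

```python
import json


def model_deployment(name: str, image: str, replicas: int = 3) -> dict:
    """Build a minimal apps/v1 Deployment for a hypothetical model server."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "labels": labels},
        "spec": {
            # Kubernetes keeps this many Pods running and replaces any that disappear.
            "replicas": replicas,
            # The selector must match the Pod template's labels.
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [
                        {
                            "name": name,
                            "image": image,
                            "ports": [{"containerPort": 8080}],
                            # The kubelet restarts the container if this check keeps failing.
                            "livenessProbe": {
                                "httpGet": {"path": "/healthz", "port": 8080},
                                "initialDelaySeconds": 10,
                                "periodSeconds": 15,
                            },
                        }
                    ]
                },
            },
        },
    }


if __name__ == "__main__":
    manifest = model_deployment("sentiment-model", "registry.example.com/sentiment-model:1.0")
    # Save this output as deployment.json and apply it with: kubectl apply -f deployment.json
    print(json.dumps(manifest, indent=2))
```

Kubernetes continuously reconciles the cluster toward this spec. If a Pod crashes or its node disappears, the ReplicaSet managed by the Deployment creates a replacement. The liveness probe covers the subtler case of a process that is still running but no longer responding.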

The scalability feature of Kubernetes is particularly beneficial for AI and machine learning workloads, which can be resource-intensive and fluctuate in demand. With the Horizontal Pod Autoscaler, Kubernetes can automatically add or remove replicas of an application based on observed metrics such as CPU utilization, so resources track the workload without manual intervention. This dynamic scaling helps serve fluctuating volumes of inference requests and supports the computational demands of training AI models, facilitating faster iteration cycles and responsiveness.
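
The Kubernetes documentation gives the Horizontal Pod Autoscaler's core rule as desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue). The autoscaler skips changes when that ratio is within a tolerance of 1.0, which is 0.1 by default. The sketch below implements only that rule plus the `minReplicas`/`maxReplicas` bounds. The real controller also applies stabilization windows and special handling for missing or unready metrics, which are omitted here.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float, target_metric: float,
                     min_replicas: int = 1, max_replicas: int = 10,
                     tolerance: float = 0.1) -> int:
    """Simplified Horizontal Pod Autoscaler scaling rule."""
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        # Close enough to the target: keep the replica count to avoid flapping.
        desired = current_replicas
    else:
        desired = math.ceil(current_replicas * ratio)
    # Respect the configured lower and upper bounds.
    return max(min_replicas, min(max_replicas, desired))


if __name__ == "__main__":
    # 4 inference Pods averaging 90% CPU against a 60% target.
    print(desired_replicas(4, 90, 60))  # 6
```

In this example, four Pods at 90% CPU against a 60% target give a ratio of 1.5, so the rule asks for six replicas. When traffic drops and utilization falls to half the target, the same rule halves the replica count.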

Additionally, Kubernetes supports a variety of storage options, including persistent volumes, which are crucial for AI applications that require access to large datasets or need to store the state of models. With its comprehensive ecosystem and strong community support, Kubernetes not only simplifies the deployment and management of AI applications but also enables best practices such as microservices architecture and GitOps workflows, further enhancing the agility and efficiency of AI development processes.
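
As a minimal sketch of how an AI workload requests durable storage, the code below builds a PersistentVolumeClaim and the matching volume and volume-mount entries that attach it to a container. The claim name `imagenet-subset`, the 100Gi size, the volume name `training-data`, and the `/data` mount path are hypothetical. Whether a volume is actually provisioned depends on the cluster's StorageClass configuration.

```python
import json
from typing import Optional


def dataset_claim(name: str, size: str = "100Gi",
                  storage_class: Optional[str] = None) -> dict:
    """PersistentVolumeClaim requesting durable storage for datasets or checkpoints."""
    spec = {
        # ReadWriteOnce: the volume can be mounted read-write by a single node.
        "accessModes": ["ReadWriteOnce"],
        "resources": {"requests": {"storage": size}},
    }
    if storage_class is not None:
        # Without this field, the cluster's default StorageClass (if any) is used.
        spec["storageClassName"] = storage_class
    return {"apiVersion": "v1", "kind": "PersistentVolumeClaim",
            "metadata": {"name": name}, "spec": spec}


def claim_volume(claim_name: str, mount_path: str,
                 volume_name: str = "training-data") -> tuple:
    """Return the entries for a Pod's spec.volumes and a container's volumeMounts."""
    volume = {"name": volume_name, "persistentVolumeClaim": {"claimName": claim_name}}
    mount = {"name": volume_name, "mountPath": mount_path}
    return volume, mount


if __name__ == "__main__":
    print(json.dumps(dataset_claim("imagenet-subset"), indent=2))
```

Because the claim outlives any individual Pod, a training job that is rescheduled can mount the same data and resume from checkpoints written there.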

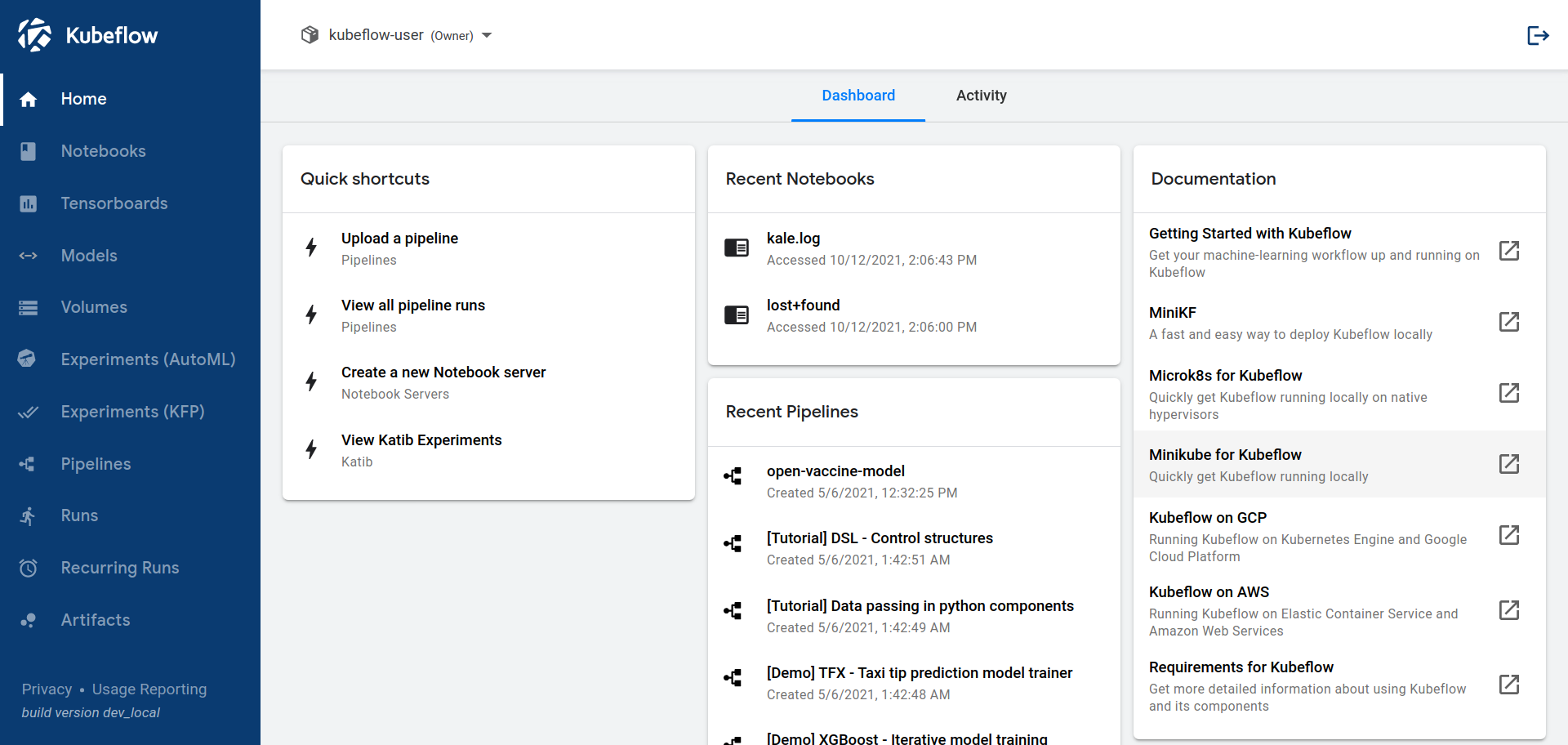

Kubeflow

Kubeflow emerges as a pivotal DevOps technology, particularly tailored for streamlining machine learning (ML) workflows on Kubernetes. Designed with the complexities of ML in mind, Kubeflow provides a comprehensive, open-source platform that simplifies the deployment, monitoring, and management of ML projects from experimentation to production. It encapsulates the full lifecycle of ML projects, offering tools for building ML models, orchestrating complex workflows, serving models, and managing datasets, all within the Kubernetes ecosystem. This seamless integration with Kubernetes leverages its scalability and reliability, ensuring that ML projects can easily be scaled up to meet demand and managed efficiently.

At its core, Kubeflow is about making ML workflows as simple and portable as possible. By leveraging containerization, Kubeflow allows data scientists and ML engineers to ensure consistency across diverse environments, from local development to cloud-based production systems. This portability solves many of the “works on my machine” problems that plague software development, including ML projects. Furthermore, Kubeflow’s modular architecture enables users to pick and choose the components they need for their project, whether it’s for data preprocessing, model training, hyperparameter tuning, or model serving, ensuring flexibility and customization to fit any ML project’s needs.

Moreover, Kubeflow enhances collaboration between data scientists, developers, and operations teams, facilitating a more efficient DevOps pipeline for ML projects. By automating many aspects of the ML workflow, Kubeflow allows teams to focus more on model development and less on the operational complexities. Its integration with other DevOps tools and practices, such as continuous integration and continuous deployment (CI/CD) pipelines, further streamlines the development process. This collaborative and integrated approach accelerates the delivery of ML projects, from conception to production, enabling organizations to leverage AI and ML capabilities more effectively and at a faster pace.

Apache Airflow

Apache Airflow is an open-source platform designed to programmatically author, schedule, and monitor workflows. In the context of AI development, Airflow serves as a backbone for managing complex data pipelines essential for training and deploying AI models. Its ability to define workflows as code enables data scientists and engineers to create reproducible and maintainable workflows, ensuring that data processing, model training, and other tasks are executed in a consistent and reliable manner.

Airflow’s scheduler executes tasks on an array of workers while following the specified dependencies between tasks. This is particularly useful for AI projects, where tasks often need to be executed in a specific order, such as data extraction, cleaning, preprocessing, model training, and evaluation. Airflow ensures that these tasks are run at the right time, in the right order, and under the right conditions, automating the workflow and freeing up data scientists to focus on model development and analysis.
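
In an Airflow DAG file, dependencies like these are usually declared with the bitshift operator, for example `extract >> clean`. To show what a scheduler does with such declarations without requiring an Airflow installation, the sketch below uses Python's standard `graphlib.TopologicalSorter` (Python 3.9+) on a hypothetical training pipeline. Each round contains the tasks whose upstream tasks have all finished, which is the set a scheduler could hand to separate workers in parallel. This is an analogy for the ordering logic, not Airflow's actual scheduler code.

```python
from graphlib import TopologicalSorter

# Hypothetical training pipeline: each task maps to the set of tasks it depends on.
PIPELINE = {
    "clean": {"extract"},
    "build_features": {"clean"},
    "validate_data": {"clean"},
    "train": {"build_features", "validate_data"},
    "evaluate": {"train"},
}


def schedule(graph: dict) -> list:
    """Group tasks into rounds; every task in a round has all upstream tasks done."""
    sorter = TopologicalSorter(graph)
    sorter.prepare()  # raises graphlib.CycleError if the dependencies contain a cycle
    rounds = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())  # tasks that could run in parallel now
        rounds.append(ready)
        sorter.done(*ready)  # mark them finished, unlocking downstream tasks
    return rounds


if __name__ == "__main__":
    for i, tasks in enumerate(schedule(PIPELINE), start=1):
        print(f"round {i}: {', '.join(tasks)}")
```

Here `build_features` and `validate_data` land in the same round because both depend only on `clean`, while `train` waits for both of them.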

Additionally, Airflow’s rich user interface provides visibility into the execution and status of workflows, making it easier for teams to monitor their data pipelines and debug issues when they arise. The platform’s extensibility, through a wide range of plugins and integrations, allows for customization and enhancement of workflows, catering to the unique requirements of AI projects. By automating and managing data pipelines with Airflow, teams can accelerate the development and deployment of AI models, ensuring they are built on a foundation of high-quality, consistent data.

JupyterHub

JupyterHub stands as a transformative tool in the landscape of collaborative computing, enabling users to access, share, and manage Jupyter notebooks across a diverse range of environments. It’s designed to support many users simultaneously by hosting multiple instances of the single-user Jupyter notebook server. JupyterHub is particularly valuable in educational settings, research labs, and data science teams, where there is a need for a centralized platform that facilitates interactive, exploratory computing and data analysis. The platform simplifies the process of managing dependencies and environments for a multitude of users, ensuring that resources are efficiently allocated and that users can work independently or collaboratively on projects without interfering with each other’s work.

One of the key strengths of JupyterHub is its adaptability to various infrastructures, including cloud services, high-performance computing clusters, and even local networks. This flexibility makes it an ideal solution for organizations looking to provide a consistent computational environment to their teams, regardless of the underlying hardware or platform. By abstracting away the complexity of managing individual installations and dependencies, JupyterHub allows users to focus on their computational tasks, fostering innovation and productivity. Furthermore, its ability to integrate with authentication systems ensures secure access, allowing administrators to control user permissions and access levels, thereby safeguarding sensitive data and resources.

Moreover, JupyterHub enhances the collaborative capabilities of the Jupyter ecosystem, enabling users to share notebooks, data, and insights effortlessly within a secure environment. This collaboration is crucial for teams working on complex data analysis, machine learning models, and research projects, as it enables a more dynamic exchange of ideas and accelerates the discovery process. Additionally, JupyterHub supports a range of plugins and extensions, allowing customization and extension of its functionality to meet specific project or organizational needs. Whether it’s for deploying instructional material, conducting reproducible research, or developing data-driven applications, JupyterHub provides a robust, scalable platform that empowers users to harness the full potential of interactive computing and collaboration.

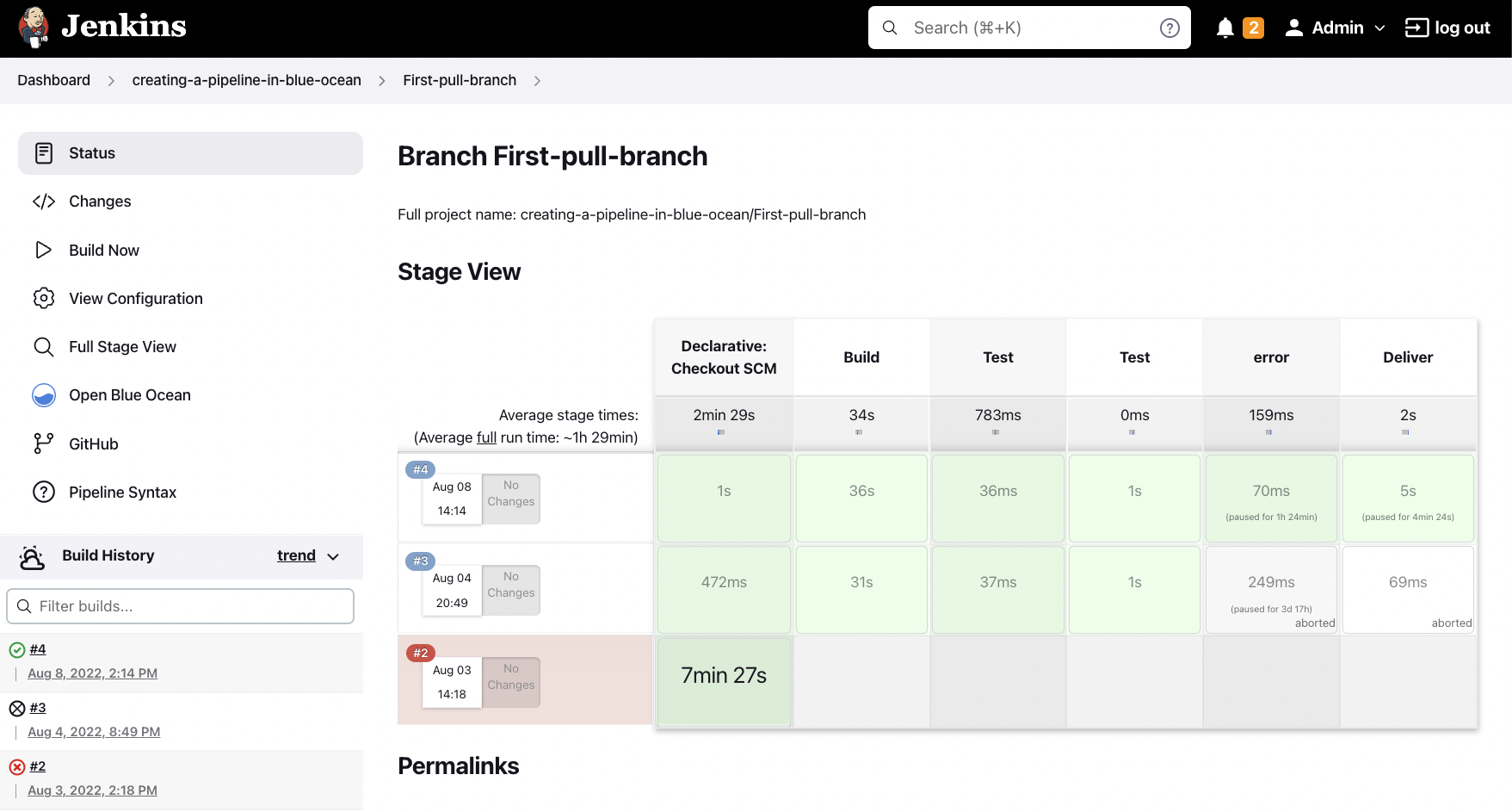

Jenkins

Jenkins, an open-source automation server, has become synonymous with continuous integration and continuous delivery (CI/CD) in the DevOps world. Its flexibility and extensive plugin ecosystem make it a valuable tool for automating the build, test, and deployment processes of AI projects. Jenkins enables teams to automate the entire lifecycle of AI model development, from data preprocessing to model training and validation, facilitating a smoother and more efficient development process.

One of the key advantages of Jenkins in the context of AI development is its support for continuous testing. AI models must be rigorously tested across various parameters and datasets to ensure their accuracy and reliability. Jenkins can automate these testing workflows, running them as part of the CI/CD pipeline whenever changes are made. This ensures that models meet the required standards before being deployed, reducing the risk of errors or poor performance in production.
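
One common way to wire such a test into a pipeline is a small quality-gate script that a Jenkins stage runs after model evaluation, for instance through a `sh 'python quality_gate.py metrics.json'` step. The script name, the `metrics.json` file produced by an earlier evaluation step, and the threshold values are all hypothetical. The only mechanism the sketch relies on is that a non-zero exit code fails the step and therefore the build.

```python
import json
import sys

# Hypothetical minimum scores a candidate model must reach before deployment.
THRESHOLDS = {"accuracy": 0.90, "f1": 0.85}


def failed_checks(metrics: dict, thresholds: dict) -> list:
    """Describe every metric that is missing or below its threshold."""
    failures = []
    for name, minimum in thresholds.items():
        value = metrics.get(name)
        if value is None:
            failures.append(f"{name}: missing")
        elif value < minimum:
            failures.append(f"{name}: {value:.3f} < {minimum:.3f}")
    return failures


def main(path: str) -> int:
    with open(path) as f:
        metrics = json.load(f)
    failures = failed_checks(metrics, THRESHOLDS)
    for failure in failures:
        print("FAIL", failure)
    # A non-zero exit code makes the Jenkins `sh` step, and the build, fail.
    return 1 if failures else 0


if __name__ == "__main__":
    # Usage inside a Jenkins stage: python quality_gate.py metrics.json
    if len(sys.argv) > 1:
        sys.exit(main(sys.argv[1]))
```

Treating "model is good enough" as a failing or passing build step lets the same CI/CD pipeline block a regression in model quality just as it blocks a failing unit test.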

Furthermore, Jenkins fosters collaboration among data scientists, developers, and operations teams by providing a shared platform for managing the development process. This collaborative environment is crucial for AI projects, which often require cross-disciplinary expertise to tackle complex challenges. By integrating Jenkins into the AI development workflow, teams can achieve faster iteration cycles, improved code quality, and more reliable AI applications, ultimately accelerating the path from experimentation to production.

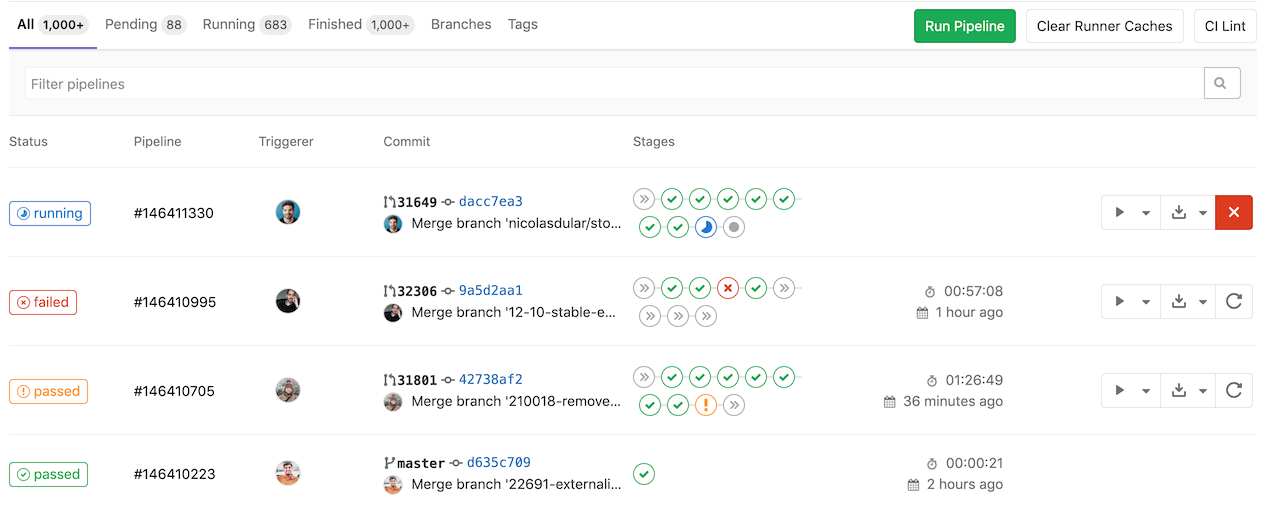

GitLab CI/CD

GitLab CI/CD is an integral part of the GitLab platform, offering a seamless experience for automating the stages of the software development lifecycle, including for AI projects. Unlike Jenkins, which must be connected to separately hosted source repositories and extended through plugins for related services, GitLab provides a unified solution for source code management, CI/CD, and monitoring within a single platform. This consolidation simplifies the development process, making it easier for teams to collaborate and manage their projects.

For AI development, GitLab CI/CD’s integrated environment supports the management of machine learning experiments, version control of models, and automation of deployment workflows. It allows data scientists and developers to iterate quickly on their models, automate testing, and ensure that only high-quality code is deployed. Because pipelines are defined as code in a `.gitlab-ci.yml` file stored alongside the project’s source, teams get precise, version-controlled control over the build, test, and deployment processes, tailored to the specific needs of AI applications.

Moreover, GitLab CI/CD promotes the adoption of best practices such as review apps and feature flags, which are particularly useful for testing AI models in isolation and gradually rolling out changes to production. These practices enhance the agility and resilience of AI development workflows, enabling teams to experiment with confidence and rapidly respond to changes without disrupting the user experience.
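
To illustrate the idea behind a gradual rollout, here is a sketch of percentage-based bucketing. Each user is hashed to a stable bucket from 0 to 99, and the new model is served only to users whose bucket falls below the rollout percentage. GitLab's feature flags offer a percent-based rollout strategy, but this code is a generic illustration rather than GitLab's implementation, and the flag name `new-ranking-model` is hypothetical.

```python
import hashlib


def rollout_bucket(flag: str, user_id: str) -> int:
    """Map a (flag, user) pair to a stable bucket in the range 0-99."""
    # Including the flag name keeps bucket assignments independent across flags.
    digest = hashlib.sha256(f"{flag}:{user_id}".encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % 100


def use_new_model(flag: str, user_id: str, percent: int) -> bool:
    """True if this user should be routed to the new model at this rollout percentage."""
    return rollout_bucket(flag, user_id) < percent


if __name__ == "__main__":
    users = [f"user-{i}" for i in range(1000)]
    enabled = sum(use_new_model("new-ranking-model", u, 10) for u in users)
    print(f"{enabled} of {len(users)} users see the new model at 10%")
```

Because a user's bucket never changes, raising the percentage from 10 to 50 only adds users to the new model's audience. Nobody flips back and forth between models on successive requests, which keeps comparisons between the old and new model clean.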

Ansible

Ansible, an open-source automation tool, excels in simplicity and ease of use, making it a popular choice for automating the deployment and management of applications, including those driven by AI. Ansible uses a declarative language, allowing developers and operations teams to define their infrastructure and deployment processes in human-readable YAML files. This approach simplifies the automation of complex deployment tasks, which is crucial for the often intricate environments required by AI applications.
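
Declarative automation depends on idempotent operations: a task describes the state it wants, changes the system only when that state is missing, and reports whether anything changed. Ansible's `ansible.builtin.lineinfile` module, for example, takes a `path` and a `line` and reports "changed" only if it had to add the line. The Python sketch below mimics that behavior for a single file to show why rerunning a playbook is safe. It is a conceptual illustration, not Ansible's implementation, and the file name and setting are hypothetical.

```python
import tempfile
from pathlib import Path


def ensure_line(path: Path, line: str) -> bool:
    """Ensure `line` is present in the file; return True only if the file was modified."""
    lines = path.read_text().splitlines() if path.exists() else []
    if line in lines:
        return False  # Desired state already holds (Ansible would report "ok").
    lines.append(line)
    path.write_text("\n".join(lines) + "\n")
    return True  # The file had to change (Ansible would report "changed").


def demo() -> list:
    """Apply the same task twice against a fresh temporary file."""
    with tempfile.TemporaryDirectory() as tmp:
        target = Path(tmp) / "model-server.env"
        return [ensure_line(target, "MODEL_CACHE_DIR=/data/models") for _ in range(2)]


if __name__ == "__main__":
    print(demo())  # [True, False]
```

The first run changes the file and the second finds nothing to do, which is what makes repeated deployments across many hosts predictable.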

Ansible’s agentless architecture is another significant advantage. It communicates with managed nodes over SSH (or, for Windows hosts, typically through PowerShell remoting over WinRM), eliminating the need for special agents to be installed on target machines. This makes it easier to manage a wide range of environments, from local development machines to cloud-based production servers, which is essential for deploying and scaling AI applications across diverse infrastructures.

Furthermore, Ansible’s extensive library of modules supports the automation of tasks across various platforms and services, including cloud providers, databases, and monitoring tools. This versatility enables DevOps teams to automate nearly every aspect of their AI application environments, from setting up data pipelines and machine learning workloads to ensuring that the necessary monitoring and logging services are in place. By leveraging Ansible, teams can achieve more reliable and repeatable deployment processes, reduce manual errors, and focus on the core aspects of their AI projects.

Terraform

Terraform by HashiCorp takes infrastructure automation to the next level with its declarative configuration files, enabling developers to define and provision infrastructure across multiple cloud providers using a consistent syntax. For AI projects, which often leverage cloud resources for scalable computing power and data storage, Terraform’s capabilities are invaluable. It allows teams to easily manage the lifecycle of cloud infrastructure required for training and deploying AI models, from creation to deletion, in a predictable and repeatable manner.

Terraform’s support for infrastructure as code (IaC) is especially beneficial for AI applications, as it ensures that the infrastructure provisioning process is transparent, version-controlled, and integrated into the development workflow. This approach minimizes the risks associated with manual infrastructure management and enables rapid scaling or modification of resources in response to the changing needs of AI projects.
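
At the heart of this workflow is `terraform plan`, which compares the configuration with the recorded state and proposes what to create, update, or destroy before anything is applied. The sketch below reduces that idea to a dictionary diff keyed by resource address. The addresses and attributes are hypothetical. Real Terraform also refreshes state against the provider, and it may replace a resource rather than update it in place when a changed attribute requires it.

```python
# Hypothetical configuration and recorded state, keyed by Terraform-style resource address.
DESIRED = {
    "aws_s3_bucket.datasets": {"versioning": True},
    "aws_instance.trainer": {"instance_type": "g5.xlarge"},
}
STATE = {
    "aws_instance.trainer": {"instance_type": "t3.large"},
    "aws_instance.old_notebook": {"instance_type": "t3.medium"},
}


def plan(desired: dict, state: dict) -> dict:
    """Diff desired resource configurations against recorded state."""
    create = sorted(set(desired) - set(state))
    destroy = sorted(set(state) - set(desired))
    update = sorted(addr for addr in set(desired) & set(state)
                    if desired[addr] != state[addr])
    return {"create": create, "update": update, "destroy": destroy}


if __name__ == "__main__":
    print(plan(DESIRED, STATE))
    # Once the changes are applied, state matches the configuration and the plan is empty.
    print(plan(DESIRED, DESIRED))
```

Because the plan is computed from version-controlled configuration, the same reviewable diff can be produced in a merge request before anyone touches real infrastructure.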

Moreover, Terraform’s ability to manage dependencies between infrastructure components simplifies the orchestration of complex cloud environments. This is critical for AI applications that may require a combination of compute instances, storage systems, and networking resources to work together seamlessly. Terraform ensures that these resources are provisioned in the correct order and configured to interact as intended, streamlining the deployment and operation of AI models in the cloud.

Prometheus

Prometheus, a powerful open-source monitoring system, is designed for reliability and efficiency, making it well-suited for monitoring AI applications. It scrapes metrics from instrumented applications and exporters over HTTP, stores them in its time-series database, and lets teams analyze them with its own query language, PromQL, providing near real-time insight into the performance and health of AI systems. This real-time monitoring is crucial for detecting and addressing issues promptly, ensuring that AI models continue to perform as expected in production environments.

One of the key features of Prometheus is its support for multi-dimensional data collection, which is particularly useful for monitoring complex AI applications. By tagging metrics with labels, teams can slice and dice the data for detailed analysis, helping to identify trends, bottlenecks, and anomalies in the system’s performance. This level of insight is invaluable for optimizing AI models and infrastructure, leading to more efficient and reliable applications.
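
To show what these labels look like on the wire, here is a sketch that renders a counter in Prometheus's text exposition format, the plain-text format Prometheus scrapes from a target's metrics endpoint. In practice the official `prometheus_client` library generates this output for you. The metric name `inference_requests_total` and its `model` and `version` labels are hypothetical. With labels like these, a PromQL query such as `sum by (model) (rate(inference_requests_total[5m]))` breaks request throughput down per model.

```python
def escape_label_value(value: str) -> str:
    """Escape a label value as the text exposition format requires."""
    # Backslash first, so backslashes added for quotes and newlines are not doubled.
    return value.replace("\\", "\\\\").replace('"', '\\"').replace("\n", "\\n")


def render_counter(name: str, help_text: str, samples: dict) -> str:
    """Render one counter; `samples` maps a tuple of (label, value) pairs to a count."""
    escaped_help = help_text.replace("\\", "\\\\").replace("\n", "\\n")
    lines = [f"# HELP {name} {escaped_help}", f"# TYPE {name} counter"]
    for labels, value in sorted(samples.items()):
        label_str = ",".join(f'{key}="{escape_label_value(val)}"' for key, val in labels)
        lines.append(f"{name}{{{label_str}}} {value}")
    return "\n".join(lines) + "\n"


# Hypothetical per-model request counts.
SAMPLES = {
    (("model", "resnet50"), ("version", "v2")): 1027,
    (("model", "bert"), ("version", "v1")): 3,
}

if __name__ == "__main__":
    print(render_counter("inference_requests_total", "Inference requests served.", SAMPLES), end="")
```

Each distinct label combination becomes its own time series, which is what allows the per-model and per-version breakdowns described above.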

Furthermore, Prometheus lets teams define alerting rules based on specific metrics. Prometheus evaluates these rules and forwards firing alerts to Alertmanager, which deduplicates, groups, and routes them as notifications, so that teams learn about potential issues, ideally before they impact users. This proactive monitoring strategy is essential for maintaining high availability and performance of AI applications, as it enables teams to respond quickly to changes in system behavior or performance degradation.
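
The `for` clause of an alerting rule is what keeps a brief spike from paging anyone. When the rule's `expr` first matches, the alert becomes pending, and it only fires once the condition has held for the whole `for` duration. The sketch below simulates that state machine for a single series evaluated at a fixed interval. The latency values, the 0.5-second threshold, and the one-minute interval are hypothetical, and real Prometheus tracks a separate alert for each label set the expression returns.

```python
# Hypothetical p95 latency (seconds), one sample per rule evaluation.
LATENCY = [0.2, 0.6, 0.7, 0.8, 0.3]


def alert_states(samples: list, threshold: float, interval_s: int, for_s: int) -> list:
    """Simulate a rule like `expr: latency > threshold` with a `for` of for_s seconds."""
    states = []
    active_since = None  # evaluation time at which the condition started holding
    for i, value in enumerate(samples):
        now = i * interval_s
        if value > threshold:
            if active_since is None:
                active_since = now
            # Pending until the condition has held for the full `for` duration.
            states.append("firing" if now - active_since >= for_s else "pending")
        else:
            active_since = None  # condition cleared: the alert resets
            states.append("inactive")
    return states


if __name__ == "__main__":
    # One evaluation per minute with a two-minute `for`: fires on the third bad evaluation.
    print(alert_states(LATENCY, 0.5, 60, 120))
```

With a `for` of zero, the same series would fire on the first bad evaluation. The `for` duration is the knob that trades detection speed against noise.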

Grafana

Grafana, a leading open-source analytics and monitoring solution, complements Prometheus by providing a powerful and intuitive platform for visualizing time-series data. With Grafana, teams can create customizable dashboards that display key performance indicators (KPIs) and metrics from Prometheus, enabling a clear and comprehensive view of the system’s health and performance. These visualizations are crucial for making informed decisions about optimizing AI applications and infrastructure.
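
Dashboards can also be managed as code. The sketch below builds the JSON payload for a dashboard with a single time-series panel backed by a PromQL query. Grafana's dashboard HTTP API (the `POST /api/dashboards/db` endpoint, called with an API token) accepts a payload of roughly this shape. The dashboard title and the metric `inference_latency_seconds_bucket` are hypothetical. Because the panel does not pin a data source, it relies on the instance's default data source.

```python
import json

# Hypothetical PromQL: 95th-percentile latency per model from a histogram metric.
P95_LATENCY = ("histogram_quantile(0.95, sum by (le, model) "
               "(rate(inference_latency_seconds_bucket[5m])))")


def dashboard_payload(title: str, expr: str) -> dict:
    """Payload for creating or updating a dashboard through Grafana's HTTP API."""
    panel = {
        "id": 1,
        "type": "timeseries",
        "title": "p95 inference latency (seconds)",
        # Position on Grafana's 24-column grid: half width, 8 rows tall.
        "gridPos": {"x": 0, "y": 0, "w": 12, "h": 8},
        # refId names the query within the panel; expr is evaluated by Prometheus.
        "targets": [{"refId": "A", "expr": expr}],
    }
    return {
        # An id of None (JSON null) asks Grafana to create a new dashboard.
        "dashboard": {"id": None, "title": title, "panels": [panel]},
        # Overwrite a matching existing dashboard instead of returning an error.
        "overwrite": True,
    }


if __name__ == "__main__":
    print(json.dumps(dashboard_payload("Model serving", P95_LATENCY), indent=2))
```

Keeping this payload in version control means dashboards can be reviewed and reproduced alongside the services they monitor.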

Grafana’s flexibility in integrating with a wide range of data sources, not just Prometheus, makes it a versatile tool for monitoring AI projects. Whether the data comes from cloud services, databases, or custom applications, Grafana can aggregate and visualize it in a unified dashboard. This capability allows teams to monitor all aspects of their AI applications, from the underlying infrastructure to the application performance and user behavior, in a single pane of glass.

Moreover, Grafana’s alerting feature enhances the monitoring ecosystem by allowing users to set up alerts directly from the dashboards. These alerts can be configured to notify teams via email, Slack, or other communication channels when certain thresholds are met, facilitating a quick response to potential issues. By leveraging Grafana’s visualizations and alerting capabilities, teams can maintain a high level of situational awareness regarding their AI applications, leading to better performance, reliability, and user satisfaction.

In the dynamic interplay of software development and artificial intelligence, the fusion of DevOps practices with AI projects has become an indispensable strategy for fostering efficiency, scalability, and breakthrough innovation. Each technology discussed plays a crucial role in optimizing the AI lifecycle: Kubernetes orchestrates containerized workloads and Kubeflow builds machine learning workflows on top of it; Airflow provides robust data pipeline management; JupyterHub offers a collaborative computing environment; Jenkins and GitLab CI/CD streamline build, test, and deployment workflows; Ansible and Terraform automate infrastructure; and Prometheus and Grafana deliver insightful monitoring and visualization.

By integrating these powerful tools, teams can not only accelerate the development and deployment of AI models but also ensure their robust and efficient operation in production, propelling the advancement and application of AI across sectors. As agility, teamwork, and automation become the backbone of intelligent solutions for today’s complex digital challenges, Allierce is here to guide you through this journey. If your organization is looking to leverage these cutting-edge DevOps and AI technologies to drive growth and innovation, get in touch with us at Allierce. Our expertise and tailored solutions can help unlock your project’s potential, ensuring you stay ahead in the rapidly evolving landscape of tech and AI.